The problem to solve can be anything, from managing a complex bookkeeping database through mathematical problems to searching for extraterrestrial life. The SETI (Search for Extra-Terrestrial Intelligence) program uses shared computing to analyze the vast amount of data coming from telescopes and other sensors across the world. Users install a tiny program on their computers, which sets aside computing resources. Together, these computers then work as a single giant computer to process data and signals from outer space.

What Is Distributed Computing?

The concept of shared computing has existed since the first mainframe machines came to life in the 1960s. The first distributed systems consisted of a mainframe computer and multiple terminals, sometimes tens or hundreds of them, that were used to input data for processing. The first terminals were little more than typewriters. However, in the 1970s, the emergence of microcomputers made it possible for these terminals to become functional workstations. Then, by the 1990s, the personal computer had become commonplace. It made it possible to build viable distributed systems using client-server architecture with many tiers. Such distributed systems are quite powerful in terms of computing capabilities, because a modern personal computer has at least the computing power of an early mainframe. They also connect tens or hundreds of such devices into a single computing system, creating a very powerful tool for solving very complex problems. That’s the practical realization of a distributed system. You now have an idea of the two main elements of a shared computing system.

What Are The Elements Of Distributed Computing?

As you can see, a distributed system needs a central computing unit with many smaller computers connected to it, all operating within a single framework. The central computing unit can itself consist of several computers. However, the system still needs multiple other devices that provide computing resources to be considered a distributed computing system.

Below, you can see a simple workstation-server model of a distributed system. Such a system has three main components: a workstation, one or more servers, and a communication network. This distributed system lacks the computing resources to solve complex problems, but it is still capable of running advanced software.

Now, we move to a more advanced model of distributed systems, the processor model. In this model, a network connects servers and workstations or terminals, which can then share their processing power over the network. This is a simplistic model, and you can build more tiers of communication and networking on top of it. A cloud-based service might well use a similar model to process data and handle service requests. The only problem with such a model is that you need a critical number of workstations running at any given time for your system to be operational. But distributed systems face more challenges than connecting and operating a specific number of computers at once.

Distributed Computing Software Challenges

On the surface, you increase the computing power of a distributed system by just adding more units to it. But this holds only for a completely homogeneous system, one made up of devices built the same way, using the same technology and the same programming language. That is rarely the case with real-life systems. The modern concept of distributed computing overcomes this main challenge by introducing structured communication, which makes it possible for devices to communicate at a higher level. It works until you face two other problems: location independence and component redeployment.
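
To make structured communication a little more concrete, here is a minimal sketch in Python. It assumes that nodes exchange requests as JSON text, which is only one possible language-neutral format. The function names and message fields (`task_id`, `operation`, `operands`) are made up for illustration and do not come from any particular system.

```python
import json


def encode_task(task_id, operation, operands):
    """Serialize a work request into a language-neutral JSON string."""
    message = {"task_id": task_id, "operation": operation, "operands": operands}
    return json.dumps(message, sort_keys=True)


def handle_message(raw):
    """Decode a request, run the named operation, and return a JSON reply."""
    request = json.loads(raw)
    # Hypothetical operations this node agrees to perform.
    operations = {"sum": sum, "max": max}
    result = operations[request["operation"]](request["operands"])
    return json.dumps({"task_id": request["task_id"], "result": result}, sort_keys=True)


# The sender and the receiver only need to agree on the message layout,
# not on hardware, operating system, or programming language.
reply = handle_message(encode_task(1, "sum", [2, 3, 5]))
print(reply)
```

Because the request is plain text with an agreed layout, the node that answers it could just as well be written in another language or run on different hardware.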

Location Independence And Component Redeployment

Software distributed over a network should work independently of where a component is located. Any connected device should use the same programming model for the whole system to work as expected. A second challenge is the requirement for flexible component deployment and redeployment. You need to be able to add new nodes and upgrade existing ones without rewriting the program code or shutting down the entire system.

Engineers and software developers can solve these two major problems by implementing dedicated middleware that acts as distribution infrastructure software. This software connects an application with the respective operating system, network, or database. There are various models and types of distributed computing middleware to deal with particular challenges of implementing a distributed system. The details are too technical to appeal to a non-geeky reader. The more important thing is that these models work. You can see an example of such a system on the chart below:

By using such a distributed computing software model, you can run calculation-intensive applications. You do this by sharing the data intended for processing between multiple computers while using the Internet as middleware. This software framework eliminates problems arising from different operating systems such as Windows and Linux. At the same time, it makes both a macOS computer and a Windows-based PC an integral part of a distributed computing system. Moreover, you can easily scale such a system.
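
The idea behind location independence and redeployment can be sketched with a toy registry in Python. This is an illustration under simplifying assumptions, not how any real middleware product works: the `Middleware` class, the service name `square`, and the two node functions are all hypothetical, and ordinary function calls stand in for network calls.

```python
class Middleware:
    """Toy registry mapping service names to whichever node currently hosts them."""

    def __init__(self):
        self._services = {}

    def deploy(self, name, handler):
        # Deploying under an existing name replaces the old component in place.
        self._services[name] = handler

    def call(self, name, *args):
        # Callers refer to a service by name only, never by its location.
        if name not in self._services:
            raise LookupError(f"no node currently provides {name!r}")
        return self._services[name](*args)


def square_on_node_a(x):
    return x * x


def square_on_node_b(x):
    # Stand-in for an upgraded component hosted on a different machine.
    return x ** 2


bus = Middleware()
bus.deploy("square", square_on_node_a)
before = bus.call("square", 7)

# Redeploy the component; the calling code does not change.
bus.deploy("square", square_on_node_b)
after = bus.call("square", 7)
print(before, after)
```

The caller asks for `square` both times and never learns that the component moved, which is the property the middleware is meant to provide.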

Scalability Of Distributed Computing

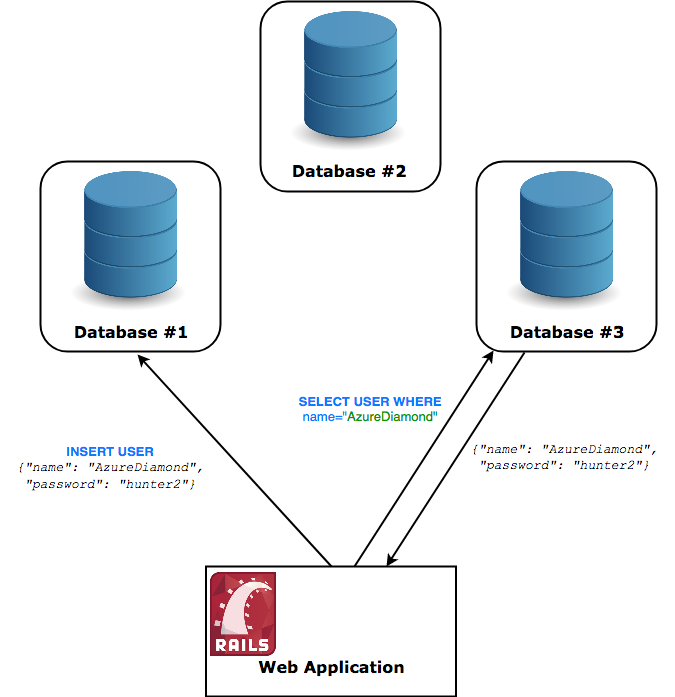

There are two approaches to scalability. You can scale vertically by upgrading your existing hardware to the best capabilities money can buy. Or you can extend your system horizontally by adding new hardware to an existing networked system. You should also be aware that distributed computing refers not only to systems where every component actively processes data, that is, where every component has a processing unit embedded in it. Distributed computing also refers to systems that process data from multiple databases, for example. Of course, such a system represents a very basic model of interaction. However, it’s a distributed system from an IT point of view. Here is what it looks like:

In this example, your distributed system will need one or more servers to connect the web application to the databases. The servers will then process the data exchanged between the app and one or more of the databases in place.

Upgrading The System

As the records in the databases grow and your web app gets more end-users, the server has to deal with an increasing number of service requests and handle more data in the process. In turn, you will need to upgrade the system to deal with these increasing computing demands. You can upgrade your server to the best technology available on the market to cope with the increasing loads. But technology, both hardware and software, has its limits, too. You cannot keep upgrading vertically without eventually reaching a limit, which depends on the currently available technology. Therefore, you need to scale horizontally by adding more computing resources. The added machines might use older technology, but the system will produce better results by utilizing the combined resources of multiple machines. This system for shared computing will not be as powerful as the sum of its parts. However, you can scale it up almost indefinitely.
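
A rough back-of-the-envelope model shows why the combined system falls short of the sum of its parts yet can still keep growing. The sketch below assumes that every machine loses a fixed share of its capacity to coordination. The 0.8 efficiency figure and the requests-per-second numbers are invented for illustration, and real overhead rarely stays this constant.

```python
import math


def cluster_capacity(nodes, per_node_rps, efficiency=0.8):
    """Effective requests per second of a cluster under a flat coordination overhead."""
    return nodes * per_node_rps * efficiency


def nodes_needed(target_rps, per_node_rps, efficiency=0.8):
    """Smallest node count whose effective capacity meets the target load."""
    return math.ceil(target_rps / (per_node_rps * efficiency))


# Ten modest machines rated at 100 requests/second each: the raw sum is 1000,
# but the assumed overhead leaves less than that.
print(cluster_capacity(10, 100))

# Meeting a 1000 requests/second target therefore takes more than ten machines.
print(nodes_needed(1000, 100))
```

Under these assumptions the cluster never reaches the raw total of its machines, but every added node still raises capacity, which is what makes horizontal scaling attractive once a single server has hit its ceiling.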

Advantages Of Distributed Computing Systems

Whether you are using a server-workstation model or grid computing, where microcomputers work in synchrony and in parallel with each other, you end up with a very scalable and fault-resistant system. You need not upgrade a distributed system every time workloads increase. You just add another module to handle the increasing workload. And you need not worry if one machine crashes, as the system will still be operational, although at reduced capacity. Distributed computing software also takes advantage of the physically closest network node for transferring data and making computations, which in turn results in better overall performance compared to traditional networked systems. Furthermore, provided that you have proper software in place, you can divide complex computing tasks into smaller ones and then assign multiple computers to work on them in parallel. This approach markedly reduces the time required compared with solving the same computation on a single machine. The downsides of distributed computing are associated mainly with the high initial cost of deploying such systems and managing them to an acceptable security level.
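
The divide-and-assign idea can be sketched in a few lines of Python. In this simplified example, threads on one machine stand in for separate computers, so it shows the structure of the approach rather than a real speed-up. The helper names (`split`, `sum_of_squares`, `distributed_sum_of_squares`) are chosen for illustration only.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Cut the data into at most `parts` chunks of roughly equal size."""
    size = max(1, -(-len(data) // parts))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares(chunk):
    """The small task each worker solves on its own share of the data."""
    return sum(x * x for x in chunk)


def distributed_sum_of_squares(data, workers=4):
    """Divide the job, let workers handle the pieces in parallel, then combine."""
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))
    return sum(partials)


total = distributed_sum_of_squares(list(range(1000)))
print(total)
```

In a real deployment each chunk would travel over the network to a different machine, and the software would also have to reassign a chunk if the machine working on it crashed.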

Distributed Computing System: A Sustainable Model

The distributed computing concept has yet to unveil its full potential as technologies such as the Internet of Things evolve and mature. We can connect multiple computers to work in parallel, but IoT networks have the potential to add growing computing power to even a home PC. Utilizing the idle computing resources of a connected home full of smart devices could, for example, make your home PC perform as fast as the latest enterprise-grade computer available on the market. The concept of distributed computing also proves to be a sustainable model for supporting always-connected systems, such as the apps we use for checking the weather forecast or working on a document remotely. Such systems need to utilize shared computing resources to reduce latency and secure service availability at all times. Last but not least, a distributed computing system enables us to perform the computations we need to run complex systems and software apps by utilizing all the free computing resources available at any given moment.